A significant advantage of using LLMs for insight extraction is efficiency. Traditional methods often involve manual data exploration, trial and error with SQL queries, and a lot of back-and-forth between data analysts and stakeholders. With LLMs and LangChain, we can automate much of this process and retrieve relevant information quickly from natural language queries.

One key challenge in working with large datasets is dealing with sensitive or private information. Many companies have strict security requirements that prevent them from using external services like OpenAI or Anthropic to process their data. This is where Llama 3 comes in: it allows us to run LLM-based SQL pipelines locally, so sensitive data stays within the organization's infrastructure.

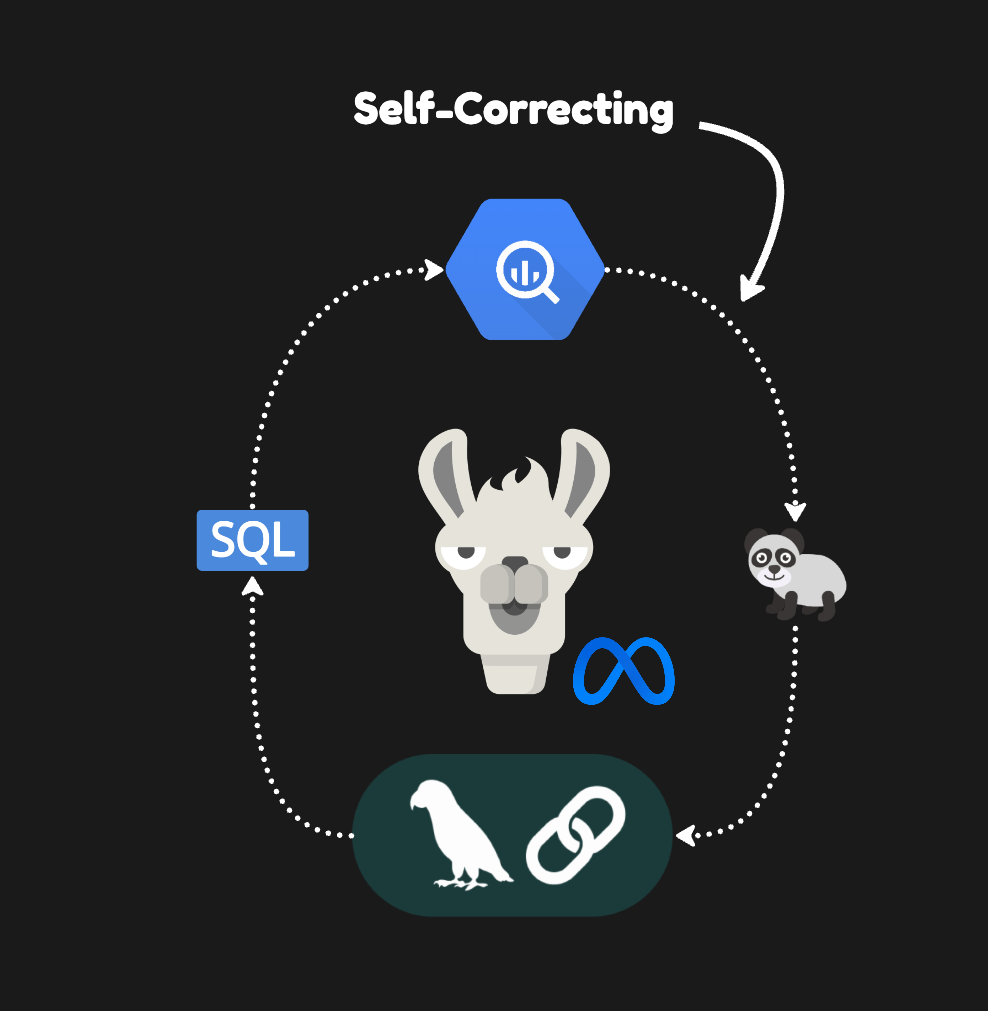

Generating SQL queries from natural language is not always straightforward: the generated query may be invalid, or it may not match the user's intent. To address this, I'll implement a retry mechanism that captures error messages and feeds them back into the chain. With the error as additional context, the model can correct its mistake and generate a more accurate query on the next attempt.
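To make the retry idea concrete, here is a minimal sketch of the loop in plain Python. It is not the LangChain chain built later in this tutorial: the `run_with_retry` helper, the `generate_sql` callable, and the `fake_llm` stand-in are all hypothetical names chosen for illustration, and SQLite is assumed only because it ships with Python. In a real pipeline, `generate_sql` would wrap the call to Llama 3.

```python
import sqlite3
from typing import Callable, List, Optional, Tuple


def run_with_retry(
    conn: sqlite3.Connection,
    question: str,
    generate_sql: Callable[[str, Optional[str]], str],
    max_attempts: int = 3,
) -> Tuple[str, List[tuple], int]:
    """Generate SQL for a question, feeding any database error back to the generator."""
    last_error: Optional[str] = None
    for attempt in range(1, max_attempts + 1):
        # The generator sees the previous failure (if any) as extra context.
        sql = generate_sql(question, last_error)
        try:
            rows = conn.execute(sql).fetchall()
            return sql, rows, attempt
        except sqlite3.Error as exc:
            # Keep both the failing query and the error message for the next attempt.
            last_error = f"Query: {sql}\nError: {exc}"
    raise RuntimeError(f"No valid query after {max_attempts} attempts.\n{last_error}")


def fake_llm(question: str, error: Optional[str]) -> str:
    """Hypothetical stand-in for the model: wrong table name first, fixed after an error."""
    if error is None:
        return "SELECT COUNT(*) FROM ordrs"
    return "SELECT COUNT(*) FROM orders"


# Small in-memory database to exercise the loop.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL)")
conn.executemany("INSERT INTO orders (amount) VALUES (?)", [(10.0,), (25.5,)])

sql, rows, attempts = run_with_retry(conn, "How many orders are there?", fake_llm)
print(sql, rows, attempts)
```

Note that this loop only catches queries the database rejects. A query that runs but answers the wrong question needs a different check, such as validating the result against the user's intent.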


By the end of this tutorial, you will have learned to set up a LangChain pipeline that uses Llama 3 to generate SQL queries dynamically. One of the key features of the approach taken in this tutorial is the ability to handle errors gracefully.

To keep things simple, I'll use GroqCloud to test the model's data analysis capabilities.
